As organisations increasingly adopt generative AI tools, ensuring privacy and security becomes paramount. A new question that security and IT decision-makers face is whether sensitive data exposure alerts should be directed to IT security teams or employees.

The Role of Education and Responsible AI

Education is essential for fostering responsible AI adoption. According to a recent survey by Gartner, 85% of organisations acknowledge the need for improved AI governance and employee training to mitigate privacy risks. Employees must be aware of the implications of using generative AI tools, including potential privacy risks. By promoting responsible AI practices, organisations can create a culture of awareness and accountability.

Sending Sensitive Data Exposure Alerts to IT Teams: Pros and Cons

Pros:

- Expert Handling: IT teams possess the technical expertise to assess and address sensitive data exposures efficiently. They can implement necessary measures to mitigate risks without disrupting the workflow of employees.

- Centralised Control: Centralising sensitive data exposure alerts allows for coordinated management and ensures that all incidents are handled consistently and thoroughly. According to Atlassian, centralised IT incident management can reduce response times by up to 40%.

- Reduced Disruption: Filtering alerts through the IT team prevents employees from being overwhelmed with technical details, allowing them to focus on their core responsibilities.

Cons:

- Delayed Response: If IT teams are inundated with alerts, there might be delays in addressing sensitive data exposures, potentially leaving sensitive data exposed for longer periods.

- Lack of Awareness: Employees might remain unaware of sensitive data issues affecting their work, reducing their vigilance and possibly leading to more exposures. An Infosec Institute report found that 74% of security breaches are due to human error, underscoring the need for employee awareness.

- Over-Reliance on IT: Dependence on IT for all sensitive data concerns can create bottlenecks and slow down overall response times.

Sending Sensitive Data Exposure Alerts to Employees: Pros and Cons

Pros:

- Enhanced Awareness: Direct alerts to employees can increase their awareness of sensitive data issues, fostering a culture of responsibility and vigilance. In a 2021 Proofpoint study, 80% of organisations said that security awareness training had reduced their staff's susceptibility to phishing attacks. That reduction doesn't happen overnight, but it can happen fast, with regular training shown to reduce risk from 60% to 10% within the first 12 months.

- Immediate Action: Employees can take prompt action to address sensitive data exposures, reducing the risk of data breaches.

- Empowerment: Empowering employees to handle sensitive data alerts can lead to a more resilient organisation, where everyone plays a role in maintaining security.

Cons:

- Potential Confusion: Employees who are not well-versed in handling sensitive data alerts might become confused or panic, leading to ineffective responses.

- Inconsistent Handling: Without centralised management, responses to sensitive data exposures can be inconsistent, creating gaps in the security framework.

- Alert Fatigue: Frequent alerts can lead to desensitisation, where employees start ignoring them, reducing their effectiveness. Deloitte's research indicates that alert fatigue can reduce the effectiveness of security programs by up to 50%.

So how can organisations resolve this dilemma?

Maintaining Privacy and Engagement: The Role of Aona AI

It’s simply not enough to have an AI policy in place. On its own, a policy will not protect a business from threats, partly because IT security policies are not always followed by the staff they are designed for, and partly because they cannot cover every possible risk.

At Aona AI, we offer a comprehensive solution for monitoring sensitive data exposures in generative AI prompts and for closing the employee engagement gap.

Benefits of Aona AI:

- Real-Time Monitoring: Aona AI continuously monitors all prompts for sensitive data exposures, ensuring that any issues are flagged immediately. This real-time capability can reduce the time to detect and respond to incidents by 60%.

- Actionable Insights: The platform provides actionable insights to both IT teams and employees, ensuring that sensitive data exposures are handled effectively.

- Educational Resources: Aona AI includes educational resources to help employees understand the importance of privacy and how to maintain it, promoting responsible AI use.

- Balanced Alerts: By customising alert settings, organisations can ensure that critical alerts are directed to IT teams while keeping employees informed and engaged without overwhelming them.
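
To make the idea of monitoring prompts for sensitive data more concrete, here is a minimal sketch in Python. It is not Aona AI's implementation: the `PATTERNS` table, the `Finding` type, and the `scan_prompt` function are hypothetical names chosen for illustration, and the two regular expressions are deliberately simple assumptions that a production detector would need to replace with far more robust rules.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately simple patterns for illustration only.
# A real detector would need stronger rules (for example checksum
# validation for card numbers) to keep false positives down.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


@dataclass
class Finding:
    kind: str  # which pattern matched, e.g. "email"
    text: str  # the matched text from the prompt


def scan_prompt(prompt: str) -> list[Finding]:
    """Return every pattern match found in a generative AI prompt."""
    findings = []
    for kind, pattern in PATTERNS.items():
        for match in pattern.finditer(prompt):
            findings.append(Finding(kind, match.group()))
    return findings


if __name__ == "__main__":
    prompt = "Summarise the complaint from jane.doe@example.com about card 4111 1111 1111 1111"
    for finding in scan_prompt(prompt):
        print(finding.kind, "->", finding.text)
```

Each finding could then feed an alert to IT, to the employee, or to both, depending on how the organisation has configured its alert settings.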

Striking the Right Balance

The optimal approach might involve a hybrid model where critical sensitive data exposure alerts are sent to IT teams for expert handling, while more general alerts are communicated to employees with clear, actionable guidance.

This ensures that serious issues are addressed promptly by experts while fostering a culture of awareness and responsibility among employees.
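
The hybrid model described above can be sketched as a small routing rule. This is an illustrative assumption rather than a description of any particular product: the `Severity` levels, the `Alert` fields, and the recipient labels are hypothetical, and each organisation would define its own threshold for what counts as critical.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    # Hypothetical severity levels; real policies may use more or fewer.
    GENERAL = 1
    CRITICAL = 2


@dataclass
class Alert:
    employee: str       # who submitted the prompt
    kind: str           # type of sensitive data detected
    severity: Severity


def route_alert(alert: Alert) -> str:
    """Pick a recipient: IT for critical alerts, the employee otherwise."""
    if alert.severity is Severity.CRITICAL:
        # Critical exposures go to the IT security team for expert handling.
        return "it_security"
    # General alerts go to the employee, who should receive clear guidance.
    return f"employee:{alert.employee}"


if __name__ == "__main__":
    print(route_alert(Alert("alice", "card_number", Severity.CRITICAL)))
    print(route_alert(Alert("bob", "email", Severity.GENERAL)))
```

Whether the employee should also be notified when a critical alert goes to IT is a policy choice this sketch leaves out; informing them keeps awareness high, at the cost of more notifications.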

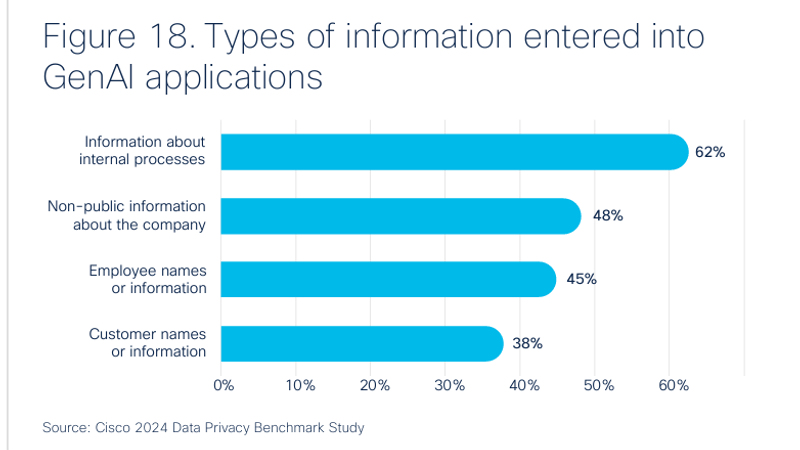

Education and responsible AI practices remain key. By investing in regular training and awareness programs, organisations can ensure that employees understand the risks and avoid entering non-public company information into generative AI tools.

Solutions like Aona AI can support these efforts by providing the tools and resources needed to maintain security and engagement.

Conclusion

Deciding whether to send sensitive data exposure alerts to IT teams or employees involves weighing the pros and cons of each approach.

By fostering a culture of education and responsible AI adoption and leveraging tools like Aona AI, security and IT decision-makers can enhance their organisation's privacy posture. This balanced approach ensures that sensitive data exposures are addressed effectively while keeping employees engaged and informed.

Through careful consideration and the use of advanced solutions, organisations can navigate the complexities of privacy in the age of generative AI, ensuring a secure and responsible AI adoption journey.

Want to learn more about Aona AI? Request a demo.