OpenClaw, the open-source AI agent that surpassed 155,000 GitHub stars within days of release, isn't just another chatbot — it's a fully autonomous agent that can read your emails, control your browser, manage your smart home, execute code, and interact with virtually any service on your machine. For productivity enthusiasts, it's a dream. For enterprise security teams, it's a wake-up call.

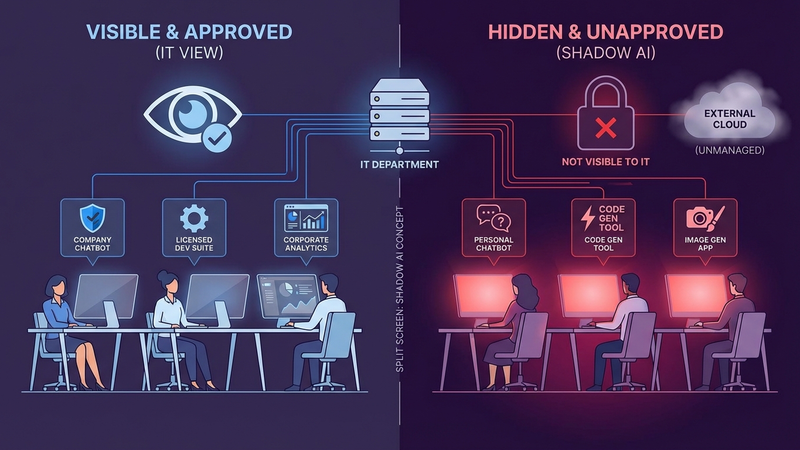

The explosive adoption of OpenClaw signals a broader shift: agentic AI — AI that acts autonomously on behalf of users — is no longer theoretical. It's here, it's running on employee laptops right now, and most security teams have zero visibility into it.

What Makes OpenClaw Different from ChatGPT

Traditional AI assistants like ChatGPT operate in a sandbox — you type a prompt, get a response, and that's it. OpenClaw breaks that model entirely. It runs locally on the user's machine with full system access and can:

- Read and send emails, Slack messages, and calendar invites

- Browse the web using your authenticated browser sessions

- Execute shell commands and write files on your machine

- Access local files, stored credentials, and connected services

- Communicate with other AI agents (including unverified ones)

- Run 24/7 with heartbeat monitoring and scheduled tasks

This isn't a chatbot. It's an autonomous digital employee with broad system-level access — and it's being installed by individual employees, often without IT's knowledge.

The Enterprise Security Risks of Agentic AI

The risks introduced by agentic AI tools go far beyond what traditional Shadow AI presents. Here are the key concerns security leaders should understand:

1. Uncontrolled Data Leakage

When an AI agent has access to your file system, email, and browser, every piece of sensitive data on that machine is potentially in scope. A misconfigured OpenClaw instance could inadvertently send proprietary code, customer data, or internal communications to an external LLM provider. Unlike a user copying text into ChatGPT, an agent can autonomously decide what data to send — and it may not always make the right call.

2. Credential and API Key Exposure

Agentic AI tools need API keys, tokens, and credentials to integrate with services. These are stored locally on the user's machine, often in plain text configuration files. A single prompt injection attack — where malicious content tricks the agent into executing unintended actions — could exfiltrate these credentials, giving attackers access to email accounts, cloud services, and internal tools.

3. Prompt Injection at Scale

Prompt injection is already a known risk for LLM applications, but agentic AI amplifies it dramatically. When an agent reads emails, browses websites, or processes documents, any of those sources could contain hidden instructions that hijack the agent's behavior. Imagine an email that, when read by the agent, instructs it to forward all subsequent emails to an external address — and the agent complies because it can't distinguish malicious instructions from legitimate ones.

4. Autonomous Lateral Movement

OpenClaw can communicate with other AI agents, including through platforms like Moltbook. In an enterprise context, this creates the possibility of agent-to-agent lateral movement — where a compromised agent on one machine communicates with agents on other machines, potentially spreading across an organization without any human in the loop.

5. Regulatory and Compliance Risks

For organizations subject to GDPR, HIPAA, SOC 2, or industry-specific regulations, an unmonitored AI agent processing sensitive data is a compliance nightmare. The agent may process personal data without appropriate consent, store information in unauthorized locations, or transmit data across jurisdictional boundaries — all without the organization's knowledge.

The Scale of Shadow AI Is Bigger Than You Think

OpenClaw's viral adoption is just the most visible example of a broader trend. According to an IBM & Censuswide study from November 2025, 80% of employees at organizations with 500+ employees use AI tools not sanctioned by their employer. Most of these are traditional chatbots, but the shift toward agentic tools is accelerating.

The problem isn't that employees are using AI — the productivity benefits are real and significant. The problem is that security teams can't protect what they can't see. And with agentic AI, the blast radius of a single unauthorized tool is orders of magnitude larger than a ChatGPT conversation.

Discovery First, Then Governance

Most coverage of OpenClaw has been alarmist. While the security concerns are legitimate, the fear-based approach — blocking everything — doesn't work. Employees will find workarounds, pushing AI usage further underground where it becomes truly invisible.

A more effective approach starts with discovery. You can't govern what you can't see. Understanding what AI tools are in use, how they're being used, and what data they access is the foundation for any governance strategy.

How Aona AI Helps Organizations Govern Agentic AI

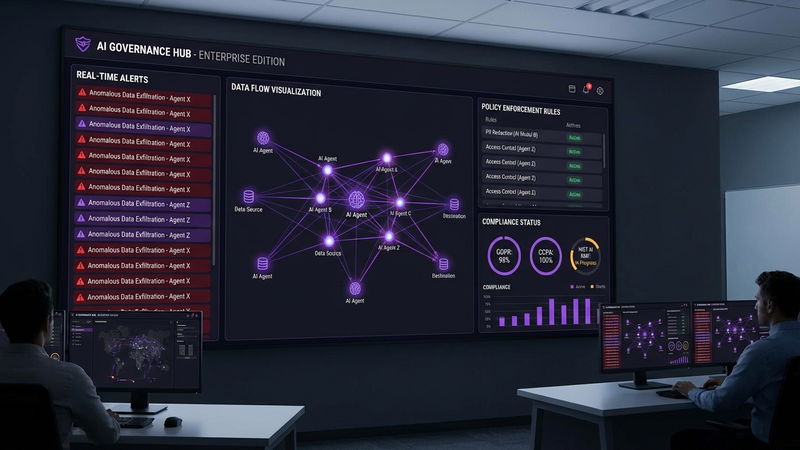

This is exactly the challenge Aona AI was built to solve. As AI adoption accelerates — from simple chatbots to fully autonomous agents like OpenClaw — organizations need a governance platform that provides visibility, control, and compliance without blocking innovation.

Shadow AI Discovery

Aona AI automatically discovers every AI tool being used across your organization — from approved enterprise platforms to individual installations of tools like OpenClaw. You get real-time visibility into who is using what, how frequently, and what types of data are being processed.

Intelligent Data Protection

Rather than blocking AI tools outright, Aona AI's guardrails automatically detect and redact sensitive information — PII, intellectual property, credentials, and confidential data — before it reaches external AI models. Users can keep working; sensitive data stays protected.

Granular Policy Enforcement

Different teams have different needs. Engineering might need code assistants; marketing might use content generation tools. Aona AI lets you set granular policies by tool, department, user, and data type — enabling safe AI adoption where it makes sense while maintaining guardrails where they're needed.

Real-Time Employee Coaching

When an employee's AI usage crosses a policy boundary, Aona AI coaches them in real-time — explaining why certain data shouldn't be shared with AI tools and suggesting safer alternatives. This turns security incidents into learning opportunities and builds a culture of responsible AI use.

The Agentic Future Is Here — Are You Ready?

OpenClaw represents an early wave of autonomous AI agents that will increasingly appear in enterprise environments. The productivity potential is genuine, but so are the risks.

The key insight: this is Shadow AI at a new scale. The path forward is not prohibition. It's informed governance — built on visibility and control. Discovery provides the foundation for understanding what tools are in use, how they're configured, and what data they access. From there, organizations can assess real risk and make deliberate decisions about enablement and restriction.

The agentic workforce is arriving. With the right tools and controls, enterprises can shape how it enters their environment rather than discovering it after the fact.

Ready to get visibility into AI usage across your organization? Book a demo with Aona AI and discover how to govern AI adoption — from chatbots to autonomous agents — without slowing your team down.